Prerequisites

The AI agent feature is only available in a Kubernetes setup.

To use PAS AI agents, you need an account with an AI service provider that is not provided by Scheer PAS. Currently, OpenAI and Mistral are supported as providers. You can also use other LLMs if they are hosted by Microsoft Foundry.

“Testing your AI agent” actually means testing the prompts. Testing your prompts is absolutely crucial and you should pay particular attention to testing during the development of your AI agent.

In agent-based AI, the prompt is the program. Therefore, the agent will only do the right or expected thing if the prompt is unambiguous. If the prompt is vague or flawed, the agent might hallucinate, fail, or cause harm. Testing is therefore essential and helps you to improve your prompt iteratively.

Using Tab “Testing”

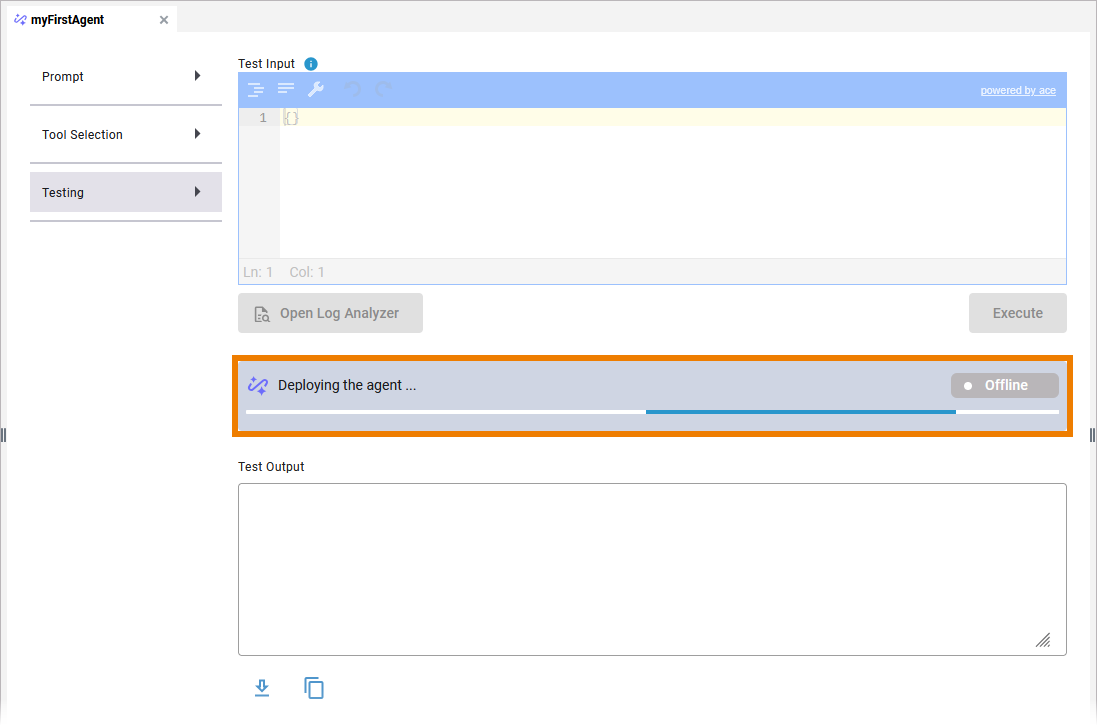

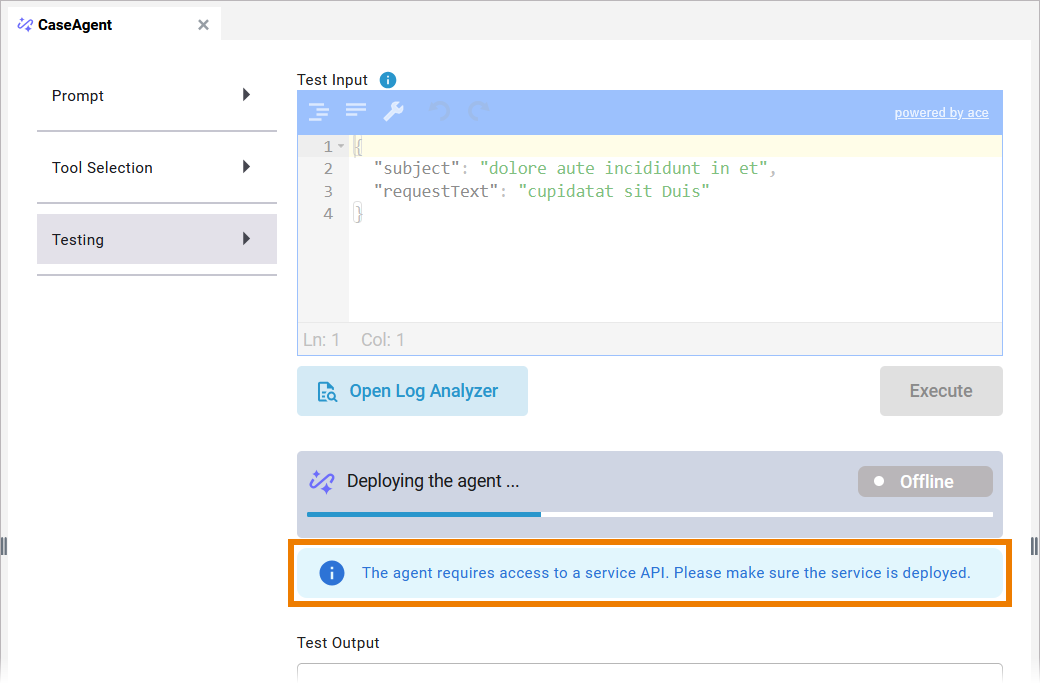

If you have filled all necessary configuration in the Prompt tab, the Testing tab is available. The progress bar shows you the status of the AI agent:

Caution!

Be careful when making changes to AI agents that are already used in productive services.

The deployment of an AI agent is triggered:

-

when you reopen the Testing tab.

-

when you deploy the service.

If you are using service APIs as tools for your AI agent (refer to Creating an AI Agent > Using Service APIs), a note is displayed to remind you that the APIs are only available if the service is deployed:

In addition to the progress bar, you will find the following functions in tab Testing:

|

Function |

Description |

|

|---|---|---|

|

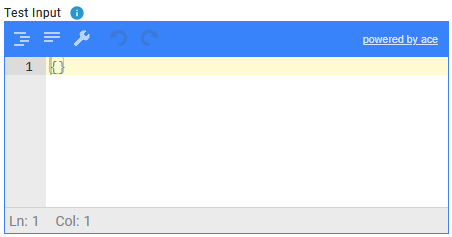

Test Input |

|

Enter your test input here. It should match the structure defined in the User Prompt. The field is pre-populated with the data model (input) linked to the agent instance. The goal of the test input is to verify the behavior of the code, e.g. whether the correct result is returned, errors are handled correctly, edge cases are covered. Check whether your input works in conjunction with your prompt and tool selection. |

|

Execute |

|

Using this button

|

|

Open Log Analyzer |

|

Use this button to open the AI agents’s logs within the Log Analyzer. If you are using tools via an MCP server, the log displays the tool calls and the input parameters sent to the tools. Use the log for debugging purposes and to check whether the tool is functioning as intended. Refer to Analyzing Logs in the Administration Guide for details about the Log Analyzer. |

|

Test Output |

|

In this section, the output for your test data is displayed. The text box is read-only. |

|

Download |

|

Use this option to download the test output as a .txt file. |

|

Copy to clipboard |

|

Use this option to copy the test output to the clipboard. |

Tips & Tricks for AI Agent Testing

Testing AI prompts effectively requires systematic methods to ensure consistency, accuracy, and robustness. The following tips and tricks help you to test your AI prompts:

General Principles

(1) Define clear objectives: Are you testing for correctness, tone, safety, creativity, brevity...?

(2) Use a test suite of diverse prompts: You can include, e.g.

-

“Happy path” (expected use)

-

Edge cases (extreme inputs)

-

Ambiguity (e.g. double meanings)

-

Malicious inputs (e.g. prompt injection attempts)

-

Domain-specific scenarios (if applicable)

(3) Benchmark against a baseline

Use a known good response or a simpler version of your prompt to compare the performance of a new one.

Techniques for Prompt Testing

-

Prompt A/B Testing: Run multiple variations of a prompt on the same input and compare outputs. This is ideal for optimizing tone, clarity, or helpfulness.

-

Few-shot vs. Zero-shot Comparison: Test both approaches to see if few-shot examples improve reliability or introduce bias.

-

Automate evaluations with test harnesses: Use scripts to run batches of prompts and log outputs. Tools: LangChain's evaluation tools, PromptLayer, or custom scripts.

-

Use Human-in-the-loop (HITL) testing: Gather human feedback for subjective metrics like tone, helpfulness, or engagement.

-

Test with real (anonymized and safe) user data: Simulate live usage by using actual queries or logs if available.

-

Stress-test the prompt: Feed long, contradictory, or nonsense input to see how the agent handles confusion.

Tricks for Prompt Crafting During Testing

-

Label sections in your prompt explicitly.

This helps the AI to understand structure, e.g.

[INSTRUCTION]: ...

[CONTEXT]: ...

[OUTPUT FORMAT]: ...

-

Version control your prompts.

-

Maintain a changelog of prompt iterations and their results. This helps with reproducibility.

-

Structure prompts using template engines to make prompt testing scalable.

-

-

Give examples for complex instructions.

Few-shot examples can boost understanding when tasks are nuanced. -

Test prompt modularity.

Break complex prompts into reusable components and test them individually. -

Use AI to critique AI.

Run the output through another prompt that scores or critiques it. -

Compare to known ground truth.

In tasks like math, coding, summarization, and classification, use correct answers to compute accuracy. -

Check for hallucinations.

Ask follow-up questions like: "Which part of your answer is backed by facts?" to test for false claims.

AIAgent_InsuranceCase_Example

Click here to download a simple example model that shows how use an AI agent to process unstructured data with Scheer PAS Designer.

The AI Agent feature is only available on Kubernetes. To execute this example, you need your own API key from OpenAI or Mistral.

You can also use other LLMs if they are hosted by Microsoft Foundry.

Related Content

Related Documentation: