Prerequisites

The AI agent feature is only available in a Kubernetes setup.

To use PAS AI agents, you need your own account with an AI service provider. This account is not provided by Scheer PAS. Currently, OpenAI and Mistral are supported as providers. You can also use other LLMs if they are hosted by Microsoft Foundry.

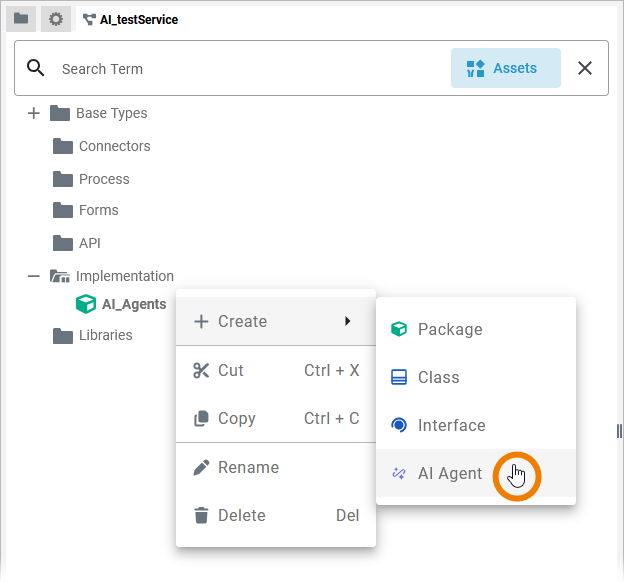

You can create a new AI agent within a package in the Implementation folder of the service panel. Open the context menu, choose Create and select AI Agent:

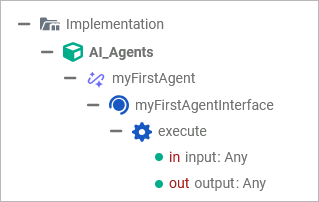

The AI agent is created with all its necessary elements:

- an interface
- an execute operation
- an input parameter of type Any
- an output parameter of type Any

Do not rename or delete the standard operation execute or its input and output parameters.

You need to adapt the type of the input and output parameters to match your service implementation. By default, they are of type Any, but both parameters must be of a complex type. We recommend creating helper classes for the AI agents in the Implementation folder that contain the necessary properties for the agent. You can adapt the type in the attributes panel of the corresponding parameter (refer to Attributes Panel > Changing the Type of an Element for details).

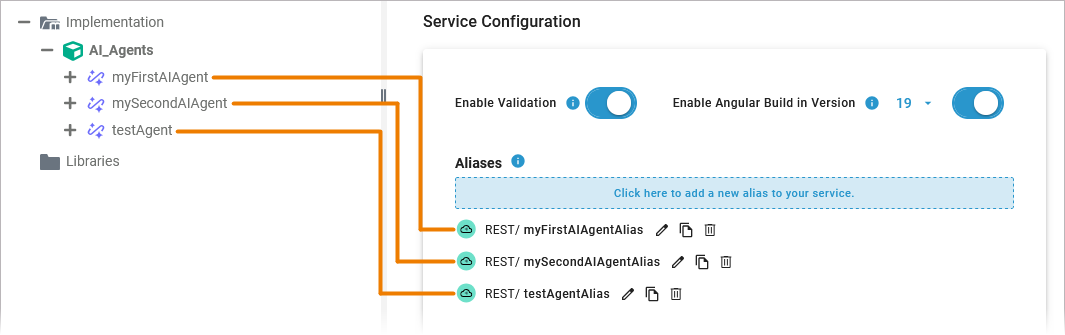

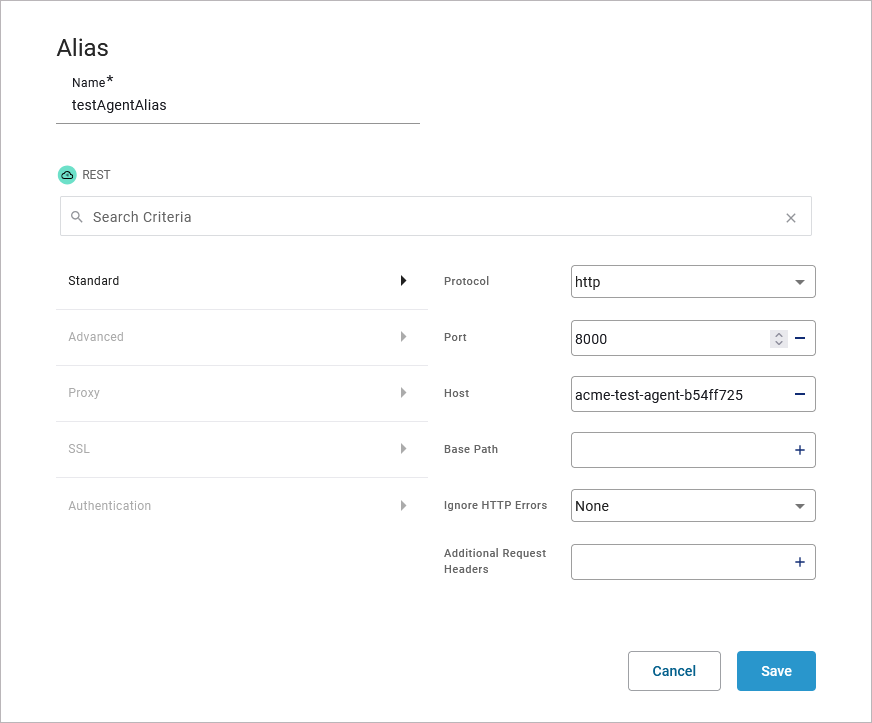

The default execute operation is a REST call. Therefore, a corresponding REST alias is automatically created for each AI agent. To make your work easier, the alias configuration is already filled in.
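For orientation, the following sketch shows what a client request to an AI agent's REST alias could look like, using the random-number prompt example further down this page. The URL, the POST method and the field names `min` and `max` of the hypothetical helper class `RandomNumberInput` are assumptions for illustration only; take the actual endpoint and method from the generated alias configuration. The request is built but not sent.

```python
import json
import urllib.request
from dataclasses import asdict, dataclass


@dataclass
class RandomNumberInput:
    """Hypothetical helper class used as the complex type of the execute input parameter."""
    min: int
    max: int


def build_agent_request(url: str, payload: RandomNumberInput) -> urllib.request.Request:
    """Build (but do not send) a JSON request to an AI agent's REST alias.

    The URL and the POST method are assumptions; use the values from your alias.
    """
    body = json.dumps(asdict(payload)).encode("utf-8")
    return urllib.request.Request(
        url=url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_agent_request(
    "https://example.com/my-agent/execute",  # hypothetical URL
    RandomNumberInput(min=1, max=10),
)
print(req.get_method(), req.full_url, req.data.decode("utf-8"))
# To actually send the request: urllib.request.urlopen(req)
```

Modeling the input as a class with named properties, rather than leaving it as Any, is what gives the request body a predictable JSON shape.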

Configuring the AI Agent

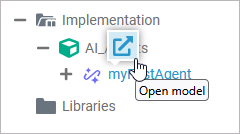

Hover over the AI agent name and use option Open model to open the AI agent’s configuration:

The configuration page consists of the following tabs:

- Prompt
- Tool Selection
- Testing (available when configuration is complete)

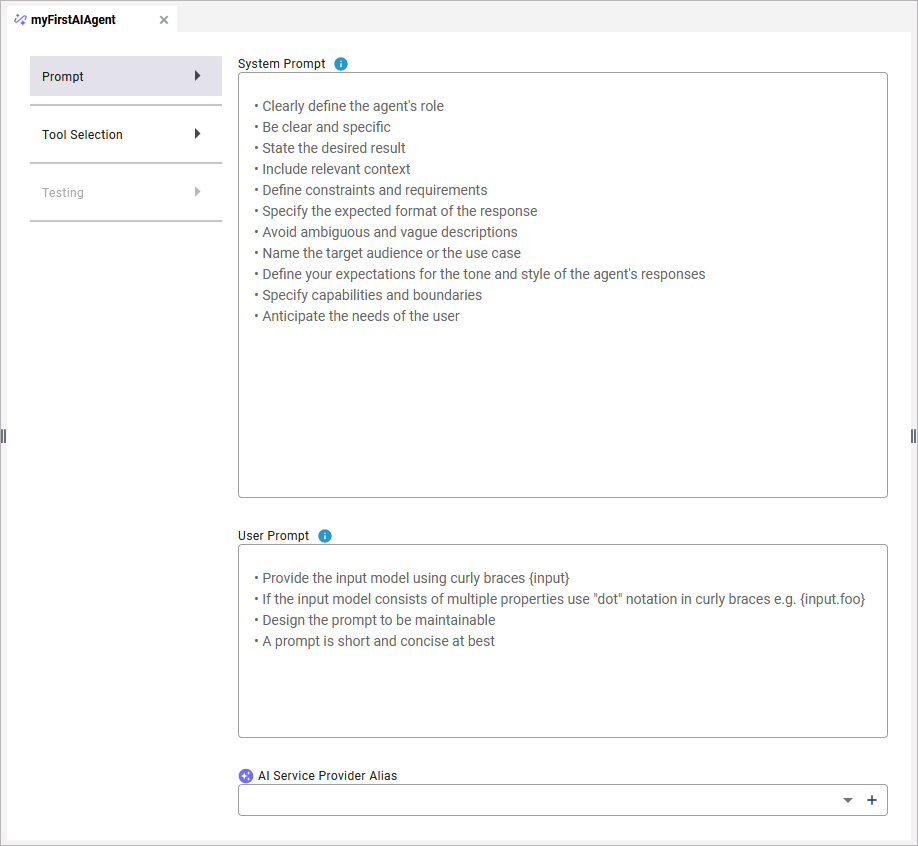

Tab Prompt

The prompts contain the essential information that brings your AI agent to life. Therefore, you should carefully craft and structure your prompts. The prompt editors contain helpful tips to support you in writing good prompts:

The system prompt and the user prompt work together to generate the final output:

- The system prompt is the most important one: it defines what the AI agent's job is.
- The user prompt guides what the AI talks about in the specific interaction. Fill it with a clear and precise task referencing the input model. Use curly braces to reference input properties.
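To make the curly-brace references more concrete, here is a minimal sketch of how a placeholder such as `{input.min}` could be resolved against the input model before the prompt is sent to the LLM. This is not how Scheer PAS implements the substitution; the function name `render_user_prompt` and the dotted-path lookup rule are assumptions for illustration.

```python
import re
from typing import Any, Mapping

# Matches references such as {input}, {input.min} or {input.user.email}.
PLACEHOLDER = re.compile(r"\{(input(?:\.[A-Za-z_][A-Za-z0-9_]*)*)\}")


def render_user_prompt(template: str, input_model: Mapping[str, Any]) -> str:
    """Replace {input...} references with values from the input model (hypothetical helper)."""

    def resolve(match) -> str:
        parts = match.group(1).split(".")[1:]  # drop the leading "input"
        value: Any = input_model
        for part in parts:
            # Walk down nested properties; fail loudly on unknown names.
            if not isinstance(value, Mapping) or part not in value:
                raise KeyError(f"Unknown input property: {match.group(1)}")
            value = value[part]
        return str(value)

    return PLACEHOLDER.sub(resolve, template)


print(render_user_prompt(
    "Generate a random number between {input.min} and {input.max}.",
    {"min": 1, "max": 10},
))
# Generate a random number between 1 and 10.
```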

The System Prompt

The system prompt is essential to ensure the AI functions appropriately, reliably, and safely in a given context. It directly influences the tone, style, content, and boundaries of the AI’s responses.

An AI agent needs a system prompt to define its behavioral framework and role. It’s essentially the AI’s "internal instruction manual", telling it how to respond to input.

The system prompt is usually written by the developer and hidden from the end user.

It tells the AI:

- Who it is (e.g. a helpful assistant, a coach, or a salesperson)
- How it should communicate (formal, casual, factual, empathetic, etc.)
- What it is allowed or not allowed to do
- What goals it should pursue

Without a system prompt, the AI wouldn’t know:

- What kind of answers are expected
- Whether to be brief or detailed
- Whether it can be creative or must strictly stick to facts
- Whether it has access to tools or external data
- How to handle sensitive topics

The User Prompt

The user prompt is the input that an end user gives to your AI agent. The user prompt tells the AI what the user wants, e.g. an answer to a question, a request to a database, help with a task, or something else. Unlike a system prompt, a user prompt is a short instruction for the agent with a very precise message. Technically speaking, the user prompt is the input that an end user sends to the AI agent instance via the created REST alias.

The user prompt triggers the AI to generate a response.

The AI then looks at the following to figure out the best possible reply:

- The content of the user prompt (your text as well as the dynamic input parameters passed to the agent and referenced with curly braces, e.g. {input.min}, inside the user prompt)
- The context of the conversation
- Its instructions from the system prompt (how it's supposed to behave)
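The three ingredients listed above are typically combined in the chat-style message format used by the OpenAI and Mistral chat APIs: the system prompt first, then earlier turns of the conversation, then the rendered user prompt. The sketch below shows that ordering; how PAS assembles the request internally is an assumption here, and `build_messages` is a hypothetical helper.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]


def build_messages(
    system_prompt: str,
    user_prompt: str,
    history: Optional[List[Message]] = None,
) -> List[Message]:
    """Combine system prompt, conversation context and user prompt (hypothetical helper)."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    # Earlier turns give the model the context of the conversation.
    messages.extend(history or [])
    # The rendered user prompt comes last and triggers the response.
    messages.append({"role": "user", "content": user_prompt})
    return messages


msgs = build_messages(
    "You are a mathematics professor.",
    "Generate a random number between 1 and 10.",
)
for m in msgs:
    print(m["role"], "->", m["content"])
```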

A well-crafted user prompt can help you get better, more useful answers, so:

- Be specific: “Write a 3-sentence summary.” instead of “Summarize the email content.”
- Add context: Give some background information or describe a scenario.
- Give format hints: “Make it sound like a conversation.”

Prompt Examples

| System Prompt | User Prompt |
|---|---|
| You are a mathematics professor. The user sends you a math question; you work on it and give the user an answer in the desired data model. | Generate a random number between {input.min} and {input.max}. |
| You are an experienced agent who creates beautiful HTML-based reports for a specific user and their activities. The report should then be sent to the specified user. If the user has no recent activities for the requested period, send them an email with the text “No recent activities found for the requested period”. If the requested period is 0, send an email stating that the specified period is invalid. | Generate a report of the activities of user {input.email} for the last {input.range} days. |
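Because the output parameter is a complex type, the agent's answer has to fit that data model. As a hedged illustration, a client calling the REST alias could check the response to the first example like this. The class `RandomNumberOutput`, its field `number`, and the assumption that the response arrives as a JSON object are all hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class RandomNumberOutput:
    """Hypothetical output class for the random-number example."""
    number: int


def parse_answer(raw: str, low: int, high: int) -> RandomNumberOutput:
    """Parse a JSON answer and check that it matches the expected data model."""
    data = json.loads(raw)
    if not isinstance(data, dict) or not isinstance(data.get("number"), int):
        raise ValueError(f"Answer does not match the output model: {raw!r}")
    result = RandomNumberOutput(number=data["number"])
    # The user prompt asked for a number between {input.min} and {input.max}.
    if not low <= result.number <= high:
        raise ValueError(f"{result.number} is outside [{low}, {high}]")
    return result


print(parse_answer('{"number": 7}', 1, 10))
```

Validating on the client side is a cheap safeguard, since an LLM can occasionally ignore the requested range or format.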

AI Service Provider Alias

In this field, you need to select the alias for the AI service provider you want to use. Currently, OpenAI and Mistral are supported providers. You can also use other LLMs if they are hosted by Microsoft Foundry.

Please note that you need your own AI service provider account. This is not provided by Scheer PAS. The general terms and conditions and privacy policy of the selected provider apply.

You can either select an existing alias from the selection list or create a new one by using option +. Refer to Aliases for more details about the creation of aliases.

You can find an overview of all necessary settings to create an AI service provider alias (for OpenAI or Mistral models) on page AI Service Provider Reference.

If you are using an LLM hosted by Microsoft Foundry, refer to Microsoft Foundry AI Service Provider Reference.

Tab Tool Selection

On their own, AI agents are limited in what they can do. For example, LLMs can’t directly send emails, book appointments, control apps, or interact with databases.

But you can provide tools that help your AI agent execute its tasks. For example, tools can act as a bridge between the AI and real-world systems, making it possible to take actions, not just provide advice.

Think of it like this: The AI model is the brain while the tools are the hands, eyes and ears. Together, they make the AI more like an agent that can not only talk, but observe, think, and act.

We offer three different ways to equip your AI agent with tools:

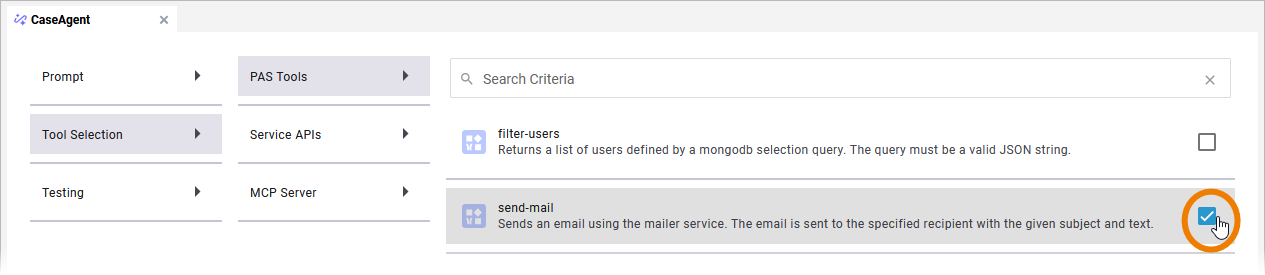

Using PAS Tools

Scheer PAS provides some platform-related tools. Available tools are listed in tab PAS Tools. Just click on a tool to select it. It is then available for usage in the AI agent.

Example: You select the send-mail tool.

In the system prompt, you can now use a phrase such as “Send an email to me@example-address.com with the content ‘Hello world’”.

The AI agent will automatically select the send-mail tool to execute this task.
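In general, tool use works like this: the model does not send the email itself, it returns the name of a tool plus arguments, and the runtime executes the matching tool. The sketch below illustrates that dispatch step with a stand-in for the send-mail tool. The registry, the argument names `to` and `body`, and the shape of the tool call are assumptions, not the PAS tool interface.

```python
import json
from typing import Any, Callable, Dict, List, Tuple

sent: List[Tuple[str, str]] = []  # collects "sent" mails so the sketch has no side effects


def send_mail(to: str, body: str) -> str:
    """Stand-in for the PAS send-mail tool (hypothetical signature)."""
    sent.append((to, body))
    return f"Mail sent to {to}"


TOOLS: Dict[str, Callable[..., Any]] = {"send-mail": send_mail}


def dispatch(tool_call: Dict[str, Any]) -> Any:
    """Run the tool the model selected, using the JSON-encoded arguments it produced."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"Model requested an unknown tool: {name}")
    arguments = json.loads(tool_call["arguments"])
    return TOOLS[name](**arguments)


# A tool call as a model might return it for the example prompt above.
call = {"name": "send-mail",
        "arguments": '{"to": "me@example-address.com", "body": "Hello world"}'}
print(dispatch(call))
```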

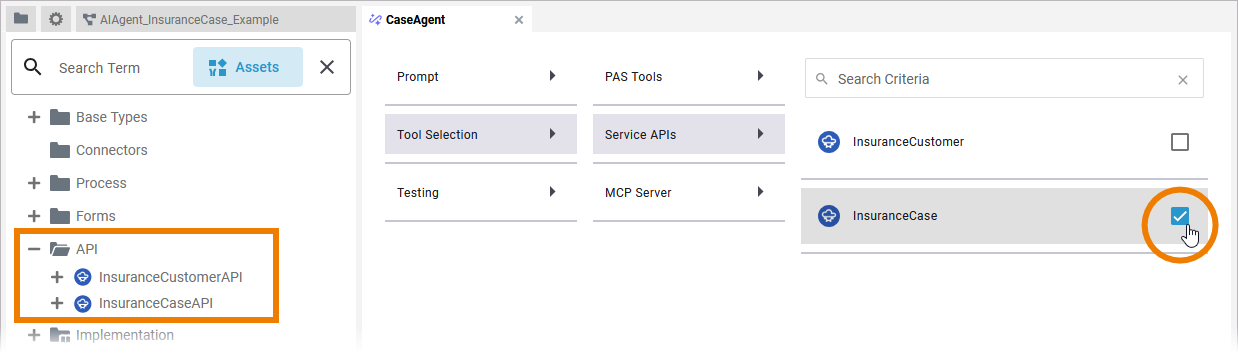

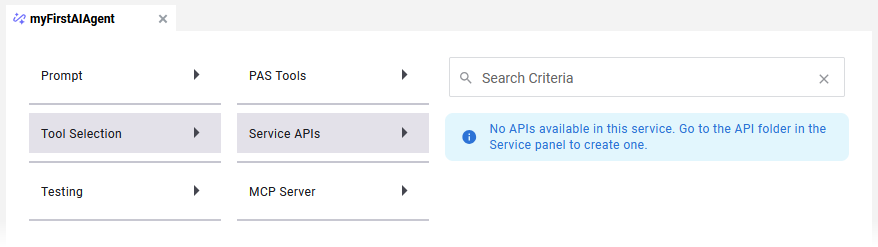

Using Service APIs

You can also provide an AI agent with your own service APIs as tools (refer to Modeling APIs for more information). Just click on a service API to select it. It is then available for usage in the AI agent:

You have to deploy the xUML service to make the API available for the AI agent.

The AI agent needs the information on what your service API does. Add helpful explanations in the Description field in the attributes panel for each API operation and its parameters.
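Why the Description field matters: LLM providers receive tools as a name, a description and a JSON Schema of the parameters, and the model decides when to call a tool based on that text alone. The sketch below builds such a definition in the function-calling format of the OpenAI API. Whether PAS maps your API descriptions exactly this way is an assumption, and the operation `getCustomerOrders` with its parameter is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class Param:
    name: str
    json_type: str      # JSON Schema type, e.g. "string" or "integer"
    description: str    # what you enter in the Description field
    required: bool = True


@dataclass
class Operation:
    name: str
    description: str
    params: List[Param] = field(default_factory=list)


def to_tool_definition(op: Operation) -> Dict[str, Any]:
    """Map an API operation to an OpenAI-style function tool definition."""
    return {
        "type": "function",
        "function": {
            "name": op.name,
            "description": op.description,
            "parameters": {
                "type": "object",
                "properties": {
                    p.name: {"type": p.json_type, "description": p.description}
                    for p in op.params
                },
                "required": [p.name for p in op.params if p.required],
            },
        },
    }


op = Operation(
    name="getCustomerOrders",
    description="Returns all open orders of a customer.",
    params=[Param("customerId", "string", "Unique ID of the customer, e.g. C-1001.")],
)
print(to_tool_definition(op)["function"]["parameters"]["required"])
```

An empty or vague description leaves the model guessing when to call the operation and what to pass, which is why these texts are worth writing carefully.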

If you have not created any APIs in your service, the Service APIs tab will remain empty and only display a corresponding message:

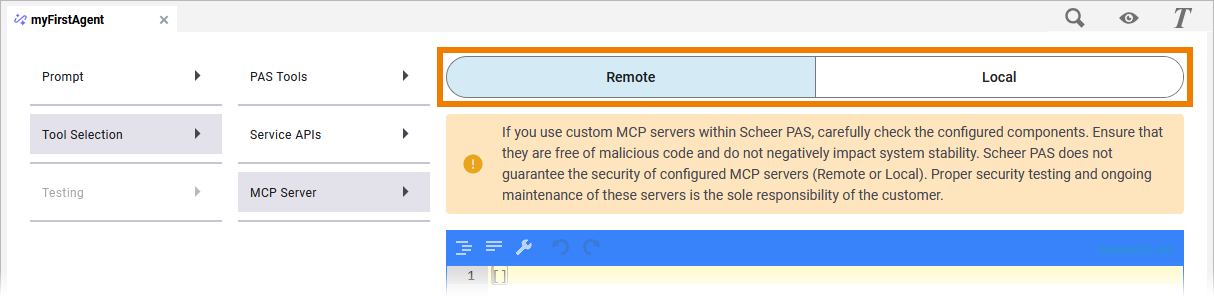

Using an MCP Server

If you want to use tools that are provided by an MCP server, you can configure the access to the desired MCP server here.

If you use custom MCP servers within Scheer PAS, carefully check the configured components. Ensure that they are free of malicious code and do not negatively impact system stability. Scheer PAS does not guarantee the security of configured MCP servers (remote or local). Proper security testing and ongoing maintenance of these servers are the sole responsibility of the customer.

You have two options: Remote (default) and Local.

| | Remote (default) | Local |
|---|---|---|
| Description | Connect to an MCP server that is situated outside of your infrastructure. The first connection attempt uses the Streamable HTTP transport. If this attempt fails, the agent retries with the outdated HTTP with SSE transport. If both connection attempts fail, the agent will not start. In that case, check the agent's logs for troubleshooting. | Connect to an MCP server that is situated inside your infrastructure. The server is downloaded and executed in isolation within the AI agent. Currently, only MCP servers installed via the npx or uvx command are supported. |
| How to connect | Go to the page of the MCP server provider and follow the developer instructions. Enter the necessary JSON object into the provided editor. For authentication on the remote MCP servers, the following methods are supported: | Go to the page of the MCP server provider and follow the developer instructions. Enter the necessary JSON object into the provided editor. It usually consists of: You can enter several MCP servers in an array object. |
| Example | | JSON |
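The connection behavior for remote servers described in the table (Streamable HTTP first, one retry with the older HTTP with SSE transport, otherwise the agent does not start) can be summarized as the following sketch. The connector functions are simulated stand-ins passed in as parameters; they are not a real MCP client API.

```python
from typing import Callable, List, Tuple

Connector = Callable[[str], object]


class AgentStartError(RuntimeError):
    """Raised when no transport could connect; the agent would not start."""


def connect_remote(url: str, transports: List[Tuple[str, Connector]]) -> Tuple[str, object]:
    """Try each transport in order and return the first working session."""
    errors: List[str] = []
    for name, connect in transports:
        try:
            return name, connect(url)
        except ConnectionError as exc:
            # Remember why this transport failed, then fall back to the next one.
            errors.append(f"{name}: {exc}")
    # In PAS, this is the point where you would check the agent's logs.
    raise AgentStartError("; ".join(errors))


def streamable_http(url: str) -> object:
    raise ConnectionError("405 Method Not Allowed")  # simulated failure


def http_sse(url: str) -> object:
    return f"SSE session to {url}"  # simulated success


print(connect_remote("https://mcp.example.com/mcp",
                     [("Streamable HTTP", streamable_http), ("HTTP with SSE", http_sse)]))
```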

Expert Advice

Lists of official integrations, maintained by companies that build production-ready MCP servers for their platforms, are available on the Internet, e.g. on GitHub.

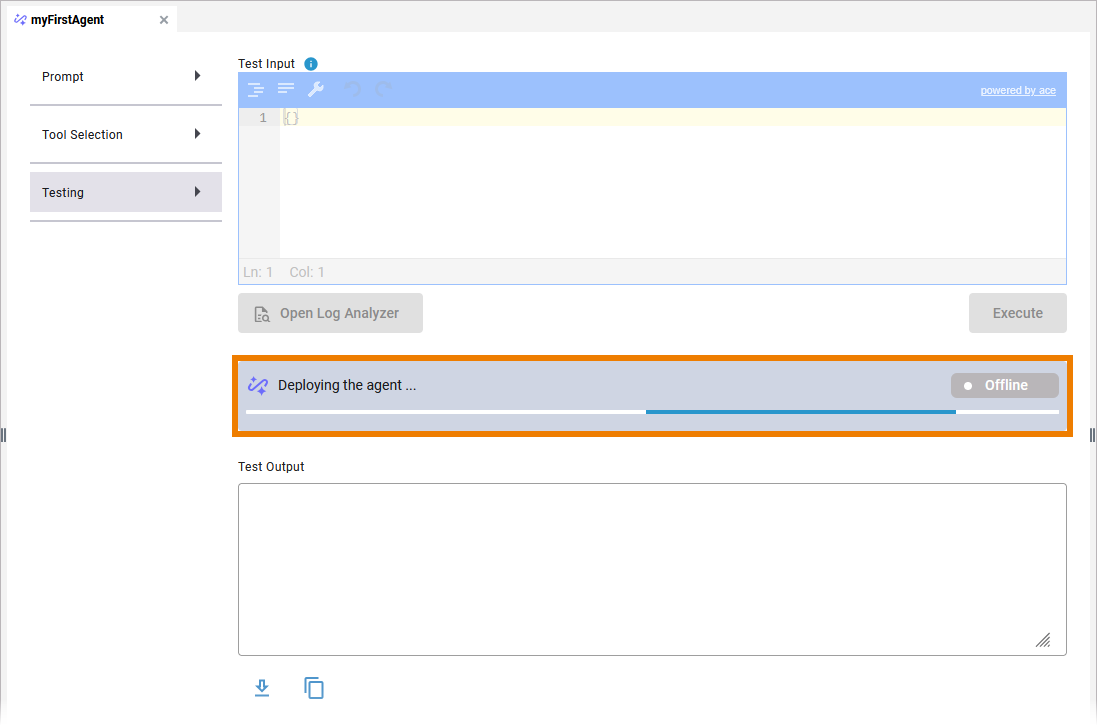

Tab Testing

Once you have filled in all necessary configuration in the Prompt tab, the Testing tab becomes available. The progress bar shows you the status of the AI agent:

Caution!

Be careful when making changes to AI agents that are already used in productive services.

The deployment of an AI agent is triggered:

- when you reopen the Testing tab
- when you deploy the service

Testing the AI agent, or rather its prompts, is an important step during development. Refer to Testing an AI Agent for more details about testing and the contents of the Testing tab.

AIAgent_InsuranceCase_Example

Click here to download a simple example model that shows how to use an AI agent to process unstructured data with Scheer PAS Designer.

The AI Agent feature is only available on Kubernetes. To execute this example, you need your own API key from OpenAI or Mistral.

You can also use other LLMs if they are hosted by Microsoft Foundry.

Related Content

Related Documentation: